Submitting Your Website to Search Engines

Introduction

Search engines such as Google, Bing and Yahoo continuously crawl the web to discover new content.

- In theory, they can find your pages solely through backlinks from other indexed pages, so manual submission is not mandatory.

However, manually submitting your website is still highly recommended for several reasons:

- Peace of mind

- Submitting your site and providing a sitemap helps search engines discover your pages and understand your website’s structure.

- This helps confirm that your pages are eligible for indexing and visibility in search results.

- Access to optimization tools

- By verifying your site in tools like Google Search Console or Bing Webmaster Tools, you unlock access to performance reports, index coverage status, keyword impression data, mobile usability diagnostics and Core Web Vitals.

- These insights can help you identify issues and optimize your site for better search performance.

From personal experience, many blog posts are reported by Google as “discovered” or “crawled” but currently not indexed. This is a common situation, especially with newer or lower-authority websites.

- In both Google Search Console and Bing Webmaster Tools, you can use the URL Inspection Tool to check the current indexing status of a page and request re-indexing after content updates or fixes.

How to Submit Your Website to Search Engines

The two most important platforms to submit your website to are Google Search Console and Bing Webmaster Tools.

- These platforms not only help search engines discover and index your website but also provide valuable tools for monitoring and improving your site's performance.

- For Google Search Console, click "Add Property" and choose Domain property (recommended, as it covers all subdomains and protocols, such as http, https and www). A Domain property is verified with a DNS record at your domain provider. The HTML meta tag method is available if you add a URL-prefix property instead.

- For Bing Webmaster Tools, which covers both the Bing and Yahoo search engines, you can choose "Import from Google Search Console" for automatic verification.

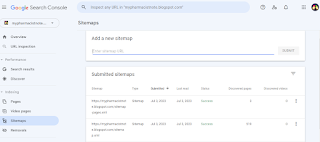

Once website ownership is verified, go to Sitemaps in the left menu and paste your sitemap URL (e.g. https://yourdomain.com/sitemap.xml).

- You can create a sitemap manually, use static site generator plugins or rely on online sitemap generation tools such as xml-sitemaps.com - especially useful for static websites without a dynamic backend.
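For a small static site, creating the sitemap manually can be scripted. The following is a minimal sketch, assuming you keep your own list of page URLs and last-modified dates. The function name `build_sitemap`, the example pages and the dates are hypothetical, and only the `loc` and `lastmod` elements of the sitemap protocol are emitted.

```python
from datetime import date
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML as a string for (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        # lastmod uses the YYYY-MM-DD form of the W3C datetime format.
        ET.SubElement(url, "lastmod").text = lastmod.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages; replace with your site's real URLs and dates.
pages = [
    ("https://yourdomain.com/", date(2025, 1, 15)),
    ("https://yourdomain.com/about.html", date(2025, 1, 10)),
]
sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

Saving the returned string as sitemap.xml in the site root would make it available at the URL you paste into the Sitemaps section.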

NOTE: In addition to manually submitting your sitemap in each webmaster tool, a common best practice is to declare its location in your robots.txt file. By adding the line Sitemap: https://yourdomain.com/sitemap.xml to your robots.txt, you provide a clear, automated signal to any compliant search engine crawler that discovers your site, telling it exactly where to find your sitemap.
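To confirm that a robots.txt file really declares the sitemap, you can read it the way a crawler would. This is a small sketch using Python's standard `urllib.robotparser` module (its `site_maps()` method requires Python 3.8 or later). The helper name `declared_sitemaps` and the sample robots.txt content are assumptions for illustration.

```python
from urllib.robotparser import RobotFileParser


def declared_sitemaps(robots_txt):
    """Return the sitemap URLs declared in the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when no Sitemap line is present.
    return parser.site_maps() or []


# Hypothetical robots.txt content for a site that allows all crawlers.
example_robots_txt = """\
User-agent: *
Allow: /

Sitemap: https://yourdomain.com/sitemap.xml
"""

print(declared_sitemaps(example_robots_txt))
```

To check a live site instead, the same parser can load the file over the network with `set_url()` followed by `read()`.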

Specific Setup For Blogger Users

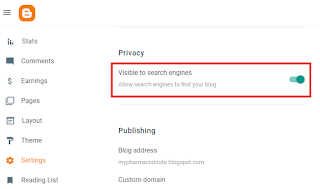

In Blogger > Settings, make sure:

- Visible to Search Engines is turned on.

- This allows your site to be crawled and indexed properly.

Blogger auto-generates sitemaps.

- Blogger posts: https://yourblog.blogspot.com/sitemap.xml

- Blogger static pages (e.g. About, Contact): https://yourblog.blogspot.com/sitemap-pages.xml

After Submission: What Happens Next?

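After you submit your site, search engines crawl it and run an initial analysis, weighing factors such as:

- Site authority and trust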

- Content quality and originality

- Crawlability and site structure

- Internal linking and sitemap clarity

Once this initial analysis is complete, you can:

- Monitor indexing status

- Use the Pages section under Indexing in Google Search Console or Site Explorer in Bing Webmaster Tools to check which URLs are indexed, which are excluded and why.

- Track search performance

- Review the Performance report in Google Search Console and the Search Performance report in Bing Webmaster Tools.

- Use the URL Inspection Tool

- To check crawl and indexing status of individual URLs

- To request reindexing for new or updated content

NOTE: Both Google and Bing enforce a daily quota for manual URL submission through the URL inspection tools.

Search Console Insights

Search Console Insights is a simplified and user-friendly dashboard designed for content creators, bloggers and small site owners to quickly understand how their content is performing without needing to navigate complex analytics dashboards.

It combines data from Google Search Console and Google Analytics to give you quick answers to questions like:

- How is your content performing on Google Search?

- Which pieces of content are gaining traction or growing?

- What is your most popular content?

- Which top and trending search queries bring people to your site?

Bing Webmaster Tools-Specific Features

IndexNow, supported by Bing, Naver, Yandex and Yep, is a protocol that instantly notifies search engines when content is created, updated or deleted.
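As a rough illustration of how an IndexNow notification is put together, the sketch below builds (but does not send) a JSON POST request for the shared `api.indexnow.org` endpoint. It assumes you have already generated an IndexNow key and host it as a text file named after the key at your site root. The function name `build_indexnow_request`, the placeholder key and the example URL are hypothetical.

```python
import json
import urllib.request

# Shared endpoint that forwards submissions to participating search engines.
INDEXNOW_ENDPOINT = "https://api.indexnow.org/indexnow"


def build_indexnow_request(host, key, urls):
    """Build a POST request announcing new, updated or deleted URLs."""
    payload = {
        "host": host,
        "key": key,
        # Assumption: the key file is hosted at the site root as <key>.txt.
        "keyLocation": f"https://{host}/{key}.txt",
        "urlList": list(urls),
    }
    return urllib.request.Request(
        INDEXNOW_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


request = build_indexnow_request(
    host="yourdomain.com",
    key="0123456789abcdef0123456789abcdef",  # placeholder, not a real key
    urls=["https://yourdomain.com/new-post.html"],
)
print(request.get_method(), request.full_url)
# To actually submit, pass the request to urllib.request.urlopen(request).
```

Keeping the request construction separate from the network call makes it easy to inspect the payload before anything is sent.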

Microsoft Clarity is a free user behavior analytics tool that integrates with Bing Webmaster Tools.

- It offers session recordings, heatmaps, scroll depth, rage/dead clicks and browser/device statistics.

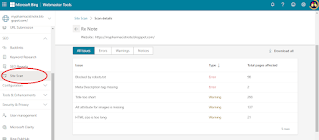

Site Scan in Bing Webmaster Tools is an on-demand site audit tool that crawls your site and checks for common technical SEO issues.

- To start a new scan, go to the Site Scan feature in Bing Webmaster Tools and click the "Start new scan" button.

- After the scan is complete, you will get a report listing the issues found, their severity and how to fix them (such as a missing meta description tag or image alt attribute).

NOTE: Ahrefs provides a more comprehensive site audit than Bing Webmaster Tools.

Summary

Submitting your website to a search engine does not guarantee indexing or high rankings. Search engine algorithms consider many factors when deciding whether and where to rank a page, including:

- Content quality and relevance

- User intent and location

- Mobile usability and site speed

- Backlinks and authority

- Keyword usage and topical coverage

With the rapid advancement of AI chatbots, more people than ever are turning to them for quick answers and information.

- While these tools do not always provide 100% accurate responses, they often deliver logically sound answers backed by vast amounts of data and knowledge.

- This shift has changed how users consume information.

- Instead of relying solely on traditional search engines or visiting individual websites, users increasingly interact with AI to get instant summaries or guidance.

As a result, the motivation for publishing content online has started to shift: website owners are no longer focused only on sharing information.

- They are increasingly focused on building brand reputation, offering services and creating unique value that goes beyond what AI can replicate.

- Providing first-hand experience, trustworthy services and authentic perspectives has become more critical than ever.

There is also an ongoing debate in the web community around whether site owners should block AI bots from crawling their content.

- Arguments for allowing AI crawling:

- Potential exposure in AI-generated answers

- Indirect traffic through citations or brand mentions

- Support for open access to information

- Arguments against allowing AI crawling:

- AI may repurpose your content without credit or traffic return

- Loss of control over how your content is used

- Competitive concerns for publishers relying on ad revenue or subscriptions
